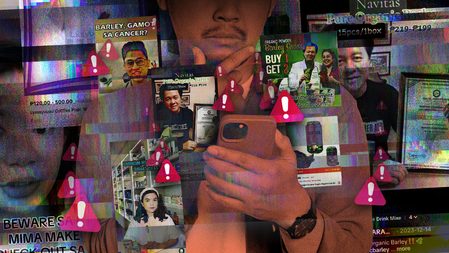

MANILA, Philippines – Following Rappler’s investigative story on how TikTok has emerged as a new marketplace for false medical information and unverified health products, the social media platform took down all the videos cited in the report.

In a story published on April 8, Rappler showed how various health-related videos containing exaggerated or downright false claims have flooded TikTok since the platform began incorporating e-commerce into its network and officially launched “TikTok Shop” in 2022. When Rappler reported these videos to TikTok on grounds that they violated the company’s own guidelines, the platform said it found no basis to take them down.

But on April 15, TikTok finally replied to our questions via email and said it has already taken down the 43 videos that we had previously reported to them for spreading health-related disinformation. TikTok said that it has also terminated certain accounts found to be propagating this type of content, including ALO DRUGSTORE, which Rappler exposed for marketing a gout supplement that advised users to take 25 tablets per hour.

According to TikTok, content on the platform goes through a machine review for possible guideline violations. If the system spots a “potential violation,” TikTok said, the content “will be automatically removed, or flagged for additional review” by its trust and safety team. An “additional review will occur if a video gains popularity or has been reported.”

One of Rappler’s key findings is that TikTok’s report feature offers only a limited selection of violations that can be cited as grounds for removing a video or suspending an account, making it difficult to push for takedowns of false health claims.

Livestreams are also inadequately monitored, allowing sellers to market unverified health products on the platform. Users who ask about product safety are often muted or blocked, something Rappler also experienced during its investigation.

Lax implementation?

Data showed that from October to December 2023, over 3.4 million videos in the Philippines were taken down for violating TikTok’s community guidelines. Globally, over 176 million videos were taken down during the same period, with automated systems accounting for 128 million of those removals. Of these, 30% violated TikTok’s regulated goods and commercial activities policies.

“More than 40,000 people work alongside technology to keep TikTok safe, and this includes dedicated teams and detection models to moderate LIVEs in real time. We [also] automatically block LIVE comments that our technology flags as likely [to be] violative,” TikTok said when asked how the platform monitors livestreams, specifically of sellers promoting unregistered products.

When asked why certain videos remain on the platform despite spreading disinformation, TikTok said that “it is natural for people to have different opinions, but when it comes to topics that impact people’s safety, we seek to operate on a shared set of facts and reality.”

It stressed that medicines, supplements, and medical devices fall under restricted product categories. Sellers of these items must furnish various documents and product attributes, including, but not limited to, FDA certificates, registration numbers, product images, packaging details, expiration dates, ingredient lists, and evidence supporting any product claims.

It also clarified that “any item requiring prescriptions (i.e. prescription medicine, prescription supplements, and prescription medical devices) must not be sold via TikTok Shop under any circumstances.”

However, these rules do not appear to be strictly enforced: Rappler’s investigation found that many sellers on the platform were still able to post and promote products without these documents or without meeting these requirements.

TikTok said that the platform does not “allow synthetic media of public figures if the content is used for endorsements or violates any other policy.” Still, some users on the platform use artificial intelligence to create “deepfake” images and videos of celebrities to promote products.

TikTok, however, gave assurances that it continues to “invest heavily in training technology and human moderators to detect, review, and remove harmful content.” This is aimed at improving procedures and enabling quicker and more efficient responses.

TikTok urged users who come across harmful content on the platform to report it in-app or on its website. – Rappler.com